The not-so-subtle irony of SRE Weekly is that sreweekly.com itself is really not very reliable at all[1]. It’s a little t3a.micro instance held together with duct tape and shell scripts. There’s no monitoring. It runs out of disk space constantly and I don’t find out until my email goes suspiciously quiet. The only thing going for it is that it’s well-patched.

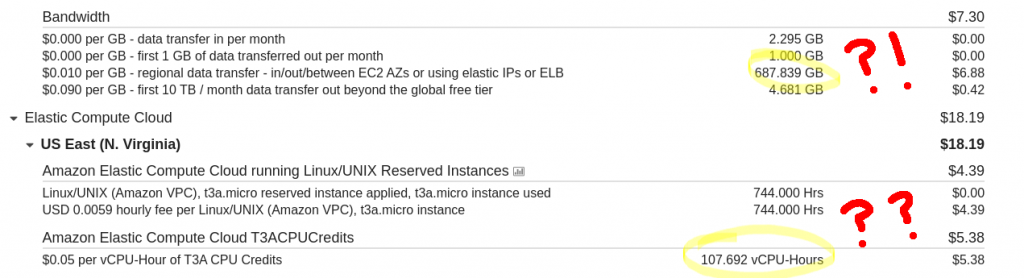

Last month, I had my @quinnypig moment. My AWS bill arrived and it was double what it usually is. Crap! Thank goodness twice a pittance is still a pittance, but I was curious, so I dug in a little. Hilarity ensued.

The reason for the high (for me) bill was immediately clear: the better part of a terabyte of data transfer out, plus some T3 Unlimited CPU credits. The meager free Cloudwatch metrics showed that my instance had indeed pegged its CPU and data transfer out for a week, going back to normal just a bit over two weeks prior.

Aw crap. Did I just get rooted?! Was my little instance being used to mine bitcoins or serve porn? Or mine porn? Now stuff was getting serious.

I quickly ran chkrootkit and it came up clean, not that that proves much. I was promptly reminded that I only keep 2 weeks of Apache logs to save disk space. No problem, I can just load up one of my EBS snapshot backups and look at its logs. My backups. The ones that totally exist. They exist, right?! Dammit. My backup script had quietly started failing several months back. Of course it had.

I really had nothing to go on at this point. Everything was back to normal, but who’s to say this wouldn’t happen again? Maybe someone still had their tendrils hooked in and they were just waiting for me to resume my negligence?

Okay, I decided, I guess it’s finally time for some monitoring. I set up a few (free) Cloudwatch alerts on bandwidth and CPU usage and the like, shrugged, and reluctantly moved on.

Last Thursday, the alert fired. Same exact symptoms. I hopped in and checked the Apache log:

54.209.27.31 - lex [27/Aug/2020:06:48:39 +0000] "POST /tt-rss/api/ HTTP/1.1" 200 1648

54.209.27.31 – lex [27/Aug/2020:06:48:39 +0000] “POST /tt-rss/api/ HTTP/1.1″ 200 545

There it was, over and over again. Tons of those. Obviously someone’s trying to brute-force my RSS reader’s API! And that IP resolves to an EC2 instance. Awesome! Case closed, add an iptables rule, beef up fail2ban, and start filling out an AWS abuse report.

Oh, they want to know what instance is being attacked, I should give them my IP address.

$ host sreweekly.com

sreweekly.com has address 54.209.27.31

…….. oh. I see.

Thankfully, I hadn’t finished submitting that abuse report. That could have been awkward.

Time to remove that iptables rule. Now what the heck am I doing to myself?! I had a sinking suspicion…

A little background: I gather a lot of articles for the newsletter through a series of Google search alerts that I’ve fine-tuned over the years, and I subscribe to the alerts using my RSS reader.

The thing is, when something like Facebook goes down, everyone and their pet rock writes a news story about it. They all have almost identical headlines. I use a hacked up version of this nifty little script to sift out the duplicates:

It uses my RSS reader’s API to manage articles. And sure enough, right there in the config file, it’s going out and coming back in through the front door, to make TLS easier:

[ttrss]

hostname=https://www.lexneva.name/lex/tt-rss

This little script has run smoothly for years, but now it was throwing exceptions. A lot of them. The main loop catches errors, prints them out, and tries again. Fast. It’s really good at it. Trust me.

I changed the code to print a full traceback (and sleep a couple seconds!) and started it back up. Sure enough, it spewed:

Traceback (most recent call last):

File “/home/lex/repos/newsdedup/newsdedup.py”, line 220, in

main()

File “/home/lex/repos/newsdedup/newsdedup.py”, line 215, in main

learn_last_read(rss_api, title_queue, args, configuration)

File “/home/lex/repos/newsdedup/newsdedup.py”, line 72, in learn_last_read

limit=limit, skip=seen)

File “/home/lex/.pyenv/versions/3.7.3/lib/python3.7/site-packages/ttrss/client.py”, line 459, in headli$

return self._client.get_headlines(feed_id=self.id, **kwargs)

File “/home/lex/.pyenv/versions/3.7.3/lib/python3.7/site-packages/ttrss/client.py”, line 222, in get_headlines

return [Headline(hl, self) for hl in r[’content’]]

File “/home/lex/.pyenv/versions/3.7.3/lib/python3.7/site-packages/ttrss/client.py”, line 222, in

return [Headline(hl, self) for hl in r[’content’]]

File “/home/lex/.pyenv/versions/3.7.3/lib/python3.7/site-packages/ttrss/client.py”, line 481, in init

self.updated = datetime.fromtimestamp(self.updated)

ValueError: year 0 is out of range

Wat.

I was fully expecting a bug in newsdedup. I definitely wasn’t expecting that a simple ttrss API cliet function call would throw this kind of error. WTF.

I did a little googling for that error and a little digging in the code, and I started to wonder… just what kind of timestamp value (seconds since the epoch) would result in a year of 0?! I asked the database:

mysql> use ttrss;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> select * from ttrss_entries order by updated asc limit 10 \G

And there was my answer. One of my feeds had 4 entries dated Mon, 01 Jan 0001 00:00:00 +0000. AD 0001, stroke of midnight, literally the moment Jesus was conceived, or took his first breath, or whatever the heck, these blog posts were penned. A Monday, as it turns out.

Some more background (I’m sorry it’ll be quick!): when I find one or two good articles by a given author, I often will drop their blog’s RSS feed into my reader in case they write anything good in the future. Even if I only care about 1 in 10 things they write going forward, that’s still a way better signal-to-noise ratio than I get from Google search alerts. And some of you folks are way higher than 10%, thank you!!

I’m guessing this AD 0001 date is a way of making a post appear way down at the bottom of the very last page in someone’s blog archive. Totally reasonable. I’d probably do the same. And yet, my poor little script was barfing, and it wasn’t even something I could fix with my own code. I’d have to fix the ttrss Python library.

So to the person whose RSS feed triggered this: I’m sorry. I had to unsubscribe. It’s totally not your fault. I think my Google alerts will probably catch anything new you write, but if not, definitely drop me a note. Sorry!

And in the end, I’m still not sure where that “year 0” error came from. The best I can figure is that it was trying to render the time in my local time zone (despite my server being in UTC!?), and since I’m over here in one of the negative time zones, it blew up.

And in case you’re curious, the timestamp for 0001-01-01 00:00:00 UTC is -62135596800.

[1] Please don’t DDoS sreweekly.com. Please! It’s not funny and you’ll just make me sad. ♥

Comments are closed here.